For properly powered studies, the replication rate should be around … In psychology, attempts to replicate published findings are less successful than expected. Rather than running a post hoc power analysis, you should report and interpret the 95% confidence intervals (see section 3.7 of the book I have referenced for tips on reporting non-significant results). My recommendation is that you respond to the reviewer respectfully, explaining that the statistical literature has clearly established that a post hoc power calculation is not appropriate. You should be able to find a few other papers to support your claim that a post hoc power calculation doesn't tell you anything the p-value doesn't. Reference actual academic articles, not blog posts as I have. We document that the problem is extensive and present arguments to demonstrate the flaw in the logic. For a two-group comparison in G*Power, choose the Means: Difference between two independent means (two groups) option in the Statistical test menu.
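To see why a post hoc power calculation adds nothing beyond the p-value, here is a minimal Python sketch. It assumes a two-sided z-test (the argument carries over in spirit to other tests): "observed power" plugs the observed effect back in as if it were the true effect, so it ends up being a function of the p-value and alpha alone. The function name `observed_power` is illustrative only.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def observed_power(p_value: float, alpha: float = 0.05) -> float:
    """'Observed' (post hoc) power of a two-sided z-test.

    Assumes the true standardized effect equals the observed one, so the
    result depends only on p_value and alpha.
    """
    z_obs = _STD_NORMAL.inv_cdf(1 - p_value / 2)  # |z| implied by the p-value
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)   # two-sided critical value
    # Probability of landing in either rejection region when the true
    # effect equals the observed effect.
    return _STD_NORMAL.cdf(z_obs - z_crit) + _STD_NORMAL.cdf(-z_obs - z_crit)


for p in (0.01, 0.05, 0.18, 0.50):
    print(f"p = {p:.2f} -> observed power = {observed_power(p):.3f}")
```

A result with p = 0.05 always has an observed power of about 0.50, and non-significant results have observed power at or below roughly that level, so reporting it to a reviewer only restates the p-value in a different form.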

# Logistic regression G*Power for Mac OS X

It is well known that statistical power calculations can be valuable in planning an experiment. There is also a large literature advocating that power calculations be made whenever one performs a statistical test of a hypothesis and obtains a statistically nonsignificant result. Advocates of such post-experiment power calculations claim the calculations should be used to aid in the interpretation of the experimental results. This approach, which appears in various forms, is fundamentally flawed.

I don't mean to sound flippant, but a post hoc power analysis is really useless at this point (see here or here or, I think, chapter 3 or 4 in this book).

EDIT: That book I linked to references this paper.

G*Power is free software available for Mac OS X and Windows XP/Vista/7/8. To compare two independent groups, select Means: Difference between two independent means.

P.S. I am just wondering whether what follows is a valid alternative method. A meta-analysis suggested that the mean correlation of the effect in question is about .5. Use that value to calculate R², and use the R² value to calculate Cohen's f². Estimate the effect size (i.e., small, medium, large) based on the f² value. If, for example, the effect size is large, plug .40 into the "Effect size f" box in G*Power and select ANCOVA to calculate power.
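The correlation-to-effect-size conversion described above can be sketched in Python. This assumes a single predictor, so that R² is simply r² (with several predictors R² would come from the fitted model instead). The cut-offs are Cohen's conventional benchmarks for f² (.02 small, .15 medium, .35 large), r = 0.5 is a hypothetical input, and the helper names are illustrative.

```python
import math


def cohens_f2_from_r(r: float) -> float:
    """Cohen's f^2 from a correlation r, assuming one predictor (R^2 = r^2)."""
    r_squared = r ** 2                  # step 1: R^2 from the correlation
    return r_squared / (1 - r_squared)  # step 2: f^2 = R^2 / (1 - R^2)


def label_f2(f2: float) -> str:
    """Cohen's conventional f^2 benchmarks: .02 small, .15 medium, .35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "below small"


r = 0.5                  # hypothetical mean correlation from a meta-analysis
f2 = cohens_f2_from_r(r)
f = math.sqrt(f2)        # G*Power's "Effect size f" is the square root of f^2
print(f"r = {r}: f^2 = {f2:.3f} ({label_f2(f2)}), f = {f:.3f}")
```

Note that Cohen's f² benchmarks for regression (.02/.15/.35) and his f benchmarks for ANOVA-type designs (.10/.25/.40) do not line up: f = .40 corresponds to f² = .16, so it is worth deciding which scale the "small/medium/large" label refers to before typing a value into G*Power.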

# Logistic regression G*Power code

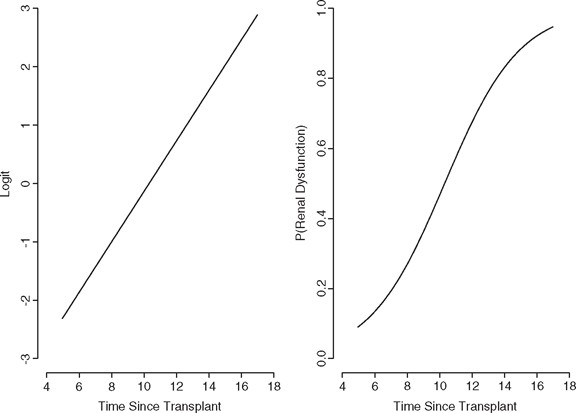

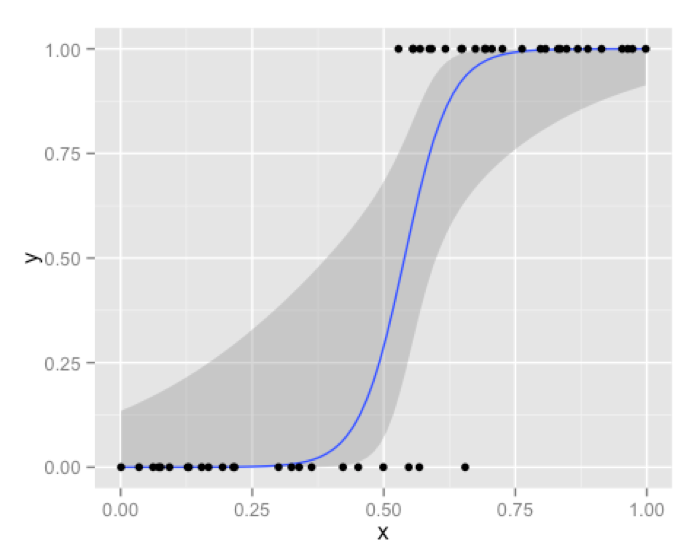

Per reviewer request, I need to do a power analysis for a logistic regression model with multiple dummy variables. I have four groups: Control, (Treatment) A, B, and C. The hypothesis is that groups A and B do NOT differ from the control group, but group C does. I tested this hypothesis by running a logistic regression model with 3 dummy variables, with the control group as the baseline, along with 3 control variables. The results supported the hypothesis, but one of the reviewers recommended conducting a power analysis, saying that the non-significant differences between the control group and group A, and between the control group and group B, might be a result of lack of power. I tried to conduct the power analysis in G*Power, but because there are no true values of Pr(Y=1|X=1) H0 and Pr(Y=1|X=1) H1 (the dependent variable is political perception), I couldn't do the analysis. I found that power analysis by simulation in R could be a solution, but unfortunately I don't have enough knowledge of R to adapt the code provided here (Simulation of logistic regression power analysis - designed experiments) to my study design. Any help or suggestions would be greatly appreciated.

By following these steps and using G*Power, you can calculate the appropriate sample size for a simple binary logistic regression analysis. This process allows you to optimize your study design, minimize errors, and improve the validity of your findings. Before SAS/STAT 14.

The above result shows that we have to consider the effect of sex (1 for male, 0 for female in this dataset). How can I estimate the sample size needed to adequately test the hypothesis in a multivariate analysis that considers the effect of sex? Since I am not experienced in programming in Stata, I would like to do this with a command or a wizard, if possible.
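A simulation still needs assumed values of Pr(Y=1) for each group, just as G*Power does; the advantage is that you can state them for the whole four-group design at once and include a covariate such as sex. Below is a minimal Python sketch of that idea (Python rather than the R code mentioned above). Everything in it is an assumption to be replaced: the group probabilities, the group size, the 50/50 male/female split, the sex effect (with no group-by-sex interaction), the use of a Wald z-test for each dummy, and the omission of the other control variables. A sensible choice for groups A and B is the smallest difference from control that you would consider substantively meaningful.

```python
import math

import numpy as np


def fit_logistic(X, y, max_iter=50, tol=1e-8):
    """Maximum-likelihood logistic regression via Newton-Raphson (IRLS).

    Returns the coefficients and their Wald standard errors.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        mu = 1.0 / (1.0 + np.exp(-(X @ beta)))  # fitted probabilities
        w = mu * (1.0 - mu)                      # IRLS weights
        info = X.T @ (X * w[:, None])            # Fisher information matrix
        step = np.linalg.solve(info, X.T @ (y - mu))
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    mu = 1.0 / (1.0 + np.exp(-(X @ beta)))
    info = X.T @ (X * (mu * (1.0 - mu))[:, None])
    se = np.sqrt(np.diag(np.linalg.inv(info)))
    return beta, se


def simulate_power(group_probs, n_per_group, sex_log_or=0.0,
                   n_sims=1000, alpha=0.05, seed=1):
    """Monte Carlo power for each treatment dummy (vs. the first group).

    Model: y ~ dummies for groups 2..k + sex (1 = male, 0 = female).
    group_probs: hypothetical Pr(Y=1) per group among females, control first.
    sex_log_or:  hypothetical log odds ratio for male vs. female.
    """
    rng = np.random.default_rng(seed)
    probs = np.asarray(group_probs, dtype=float)
    k = probs.size
    group = np.repeat(np.arange(k), n_per_group)
    n = group.size
    logit_base = np.log(probs / (1.0 - probs))
    hits = np.zeros(k - 1)
    for _ in range(n_sims):
        sex = rng.integers(0, 2, size=n)         # assumed 50/50 split
        eta = logit_base[group] + sex_log_or * sex
        y = rng.binomial(1, 1.0 / (1.0 + np.exp(-eta))).astype(float)
        X = np.column_stack([np.ones(n)]
                            + [(group == g).astype(float) for g in range(1, k)]
                            + [sex.astype(float)])
        beta, se = fit_logistic(X, y)
        z = beta[1:k] / se[1:k]                  # Wald z for each dummy
        p = np.array([math.erfc(abs(zi) / math.sqrt(2)) for zi in z])
        hits += p < alpha
    return hits / n_sims


# Hypothetical design: 30% baseline, A and B differ by a "smallest
# interesting" amount, C by more; 100 respondents per group.
power = simulate_power([0.30, 0.40, 0.40, 0.50], n_per_group=100,
                       sex_log_or=0.3, n_sims=500)
for name, pw in zip(["A", "B", "C"], power):
    print(f"{name} vs control: estimated power = {pw:.3f}")
```

Each printed value estimates the probability that the corresponding dummy is significant when the assumed probabilities are true. Increasing `n_sims` reduces Monte Carlo error, and raising `n_per_group` until power reaches a target such as 0.80 turns the same function into a sample-size search.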

The P value for the viral_load was marginal (P=0.18).
